Bots – They Are Among Us

What is a bot?

Generally speaking, “bots” are autonomous applications that perform some predetermined task automatically and repeatedly. Typically, bots are computer programs that run autonomously on the Internet, collecting and/or distributing information (and sometimes misinformation).

Bots can be useful and harmless to users in general, but they can also be used abusively and unethically to spread fake news, and even by criminals to collect people’s private information.

Internet traffic is full of bots

A study by Imperva found that, in 2016, bots accounted for more than 50% of total Internet traffic. The study was based on 17 billion visits to approximately 100,000 different, randomly selected pages. Of those visits, 29% came from malicious bots and 23% from useful bots. The remainder, about 48%, came from humans.
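As a rough illustration of what those shares mean in absolute terms, the sketch below applies the rounded percentages quoted above to the 17 billion visits. The variable and function names are made up for this example, and the results are only approximations, since the inputs are rounded figures.

```python
# Figures quoted in the text (rounded percentages of all visits).
TOTAL_VISITS = 17_000_000_000  # "17 billion" visits

shares_percent = {
    "malicious bots": 29,
    "useful bots": 23,
    "humans": 48,
}


def visits_for(share_percent: int, total: int = TOTAL_VISITS) -> int:
    """Approximate number of visits behind a rounded percentage share."""
    return total * share_percent // 100


# Combined share of all bots, malicious and useful.
bot_share = shares_percent["malicious bots"] + shares_percent["useful bots"]

if __name__ == "__main__":
    print(f"all bots: {bot_share}% of visits")
    for label, pct in shares_percent.items():
        print(f"{label}: about {visits_for(pct):,} visits")
```

The combined bot share works out to 52%, which is the "more than 50%" headline figure.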

A simple example of how this technology makes digital life easier can be seen on social media timelines. If timelines were not automated, users would have to visit each page, group or friend to find out about the latest photos, news and posts.

A “robot” controls the mechanism that feeds the News Feed. It can be understood as a bot that learns some of your preferences and searches various sites for news that may interest you, all in a very short time, something that would be impractical for a real person to do.

Search platforms such as Google and Bing also use bots to scour the Internet continuously, discovering new sites and adding them to the search index. This process involves following the links on those sites and determining, among other things, what type of content they carry and whether they are safe.
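The link-following idea can be sketched in a few lines of Python. This is only a toy illustration, not how any real search engine works: the pages live in a hypothetical in-memory dictionary (`FAKE_WEB`, with made-up `example.test` URLs) instead of being fetched over HTTP, and the names `LinkExtractor` and `crawl` are invented for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical in-memory "web": URL -> HTML. A real crawler would download pages.
FAKE_WEB = {
    "https://example.test/": '<a href="/news">News</a> <a href="/about">About</a>',
    "https://example.test/news": '<a href="/">Home</a> <a href="/news/bots">Bots</a>',
    "https://example.test/about": "<p>No links here.</p>",
    "https://example.test/news/bots": '<a href="/news">Back</a>',
}


def crawl(start_url, pages):
    """Breadth-first crawl; returns URLs in the order they were visited."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = pages.get(url)
        if html is None:
            continue  # the link points to a page we do not have
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            target = urljoin(url, href)  # resolve relative links
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return visited


if __name__ == "__main__":
    for page in crawl("https://example.test/", FAKE_WEB):
        print(page)
```

The `seen` set is what keeps the bot from looping forever when pages link back to each other. A real crawler would also analyze each page's content before adding it to an index.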

Crimes, risks and fraud

Many bots are used for unethical or criminal purposes, including: (1) searching for vulnerable sites that could be hacked to steal private information or install viruses; (2) attacking a site in order to take it down; (3) disseminating false information on the Internet and social networks (the controversial “fake news”); (4) impersonating humans and artificially following accounts and spreading comments on social networks.

A very common example of malicious bot use is the so-called DDoS (distributed denial-of-service) attack, in which the culprits launch an enormous number of bots to flood a page or Internet service with requests, so that the site cannot keep up with the demand and goes down.

Another type of malicious bot aims to detect vulnerabilities. It roams sites, testing each page for well-known vulnerabilities. If the bot finds a vulnerable site, it reports back to the hacker who controls it, who can then break in and cause damage, such as intercepting passwords and other personal data.

Bots are also used in phishing. This type of bot deceives inattentive and unprotected users into visiting a fake website, visually identical to the original, in an attempt to obtain personal data such as logins, passwords and bank credentials.

Bots spreading fake news

Researchers from Indiana University, USA, published a study that analyzed 14 million messages shared on Twitter between May 2016 and May 2017, focusing on the American presidential election.

The study found evidence that bots participated disproportionately in spreading fake news, especially as initial broadcasters, before a message went viral. According to the study, only 6% of Twitter accounts, those identified as bots, were responsible for 31% of the total fake news carried on that social network, reaching thousands of users in less than 10 seconds.

Giovanni Ciampaglia, one of the study’s coauthors, explained that people tend to place more trust in messages that appear to have been forwarded by a large number of people. Bots capitalize on this trust, making messages look popular and luring humans into helping spread them without verifying their authenticity.

Another study, by MIT researchers, demonstrated that false information spreads “farther, faster, more deeply and more widely than the truth in all categories of information”. The study also found that robots accelerate the distribution of true and false news at the same rate, which implies that it is humans who spread more false news than real news.

The big problem, however, is the difficulty of preventing and fighting harmful bots. Each time a new measure is introduced to hinder automatic profile creation, bot creators modify their tools and expand their capabilities to bypass the new captcha mechanisms and other security measures.

Bot Sentinel Tracks Trollbot Activities

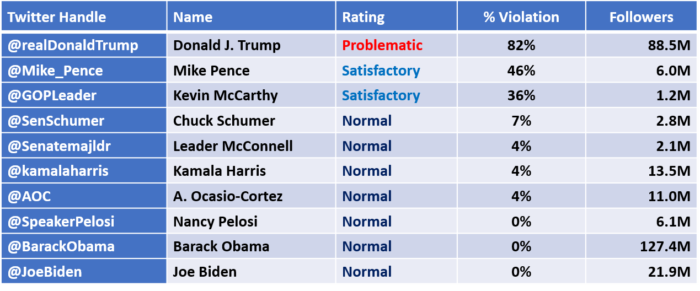

Bot Sentinel is an advanced system that uses artificial intelligence to classify suspicious profiles on Twitter. The goal is to detect trollbots, which are accounts controlled by humans whose purpose is to disseminate false or hateful messages in the hope that they go viral and artificially affect political discourse and users’ perception of certain subjects. According to Bot Sentinel, it analyzes several hundred tweets per account, and the more an account engages in behavior that violates Twitter rules, the higher its Bot Sentinel rating. The ratings are Normal (0% to 24% violations), Satisfactory (25% to 49%), Disruptive (50% to 74%), and the worst, Problematic (75% to 100%).
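The rating bands quoted above amount to a simple threshold lookup, sketched below. This is not Bot Sentinel's code or API. The function name `rating_label` is hypothetical, and treating fractional values such as 24.5% as belonging to the lower band is an assumption, since the text only lists whole-number ranges.

```python
def rating_label(violation_percent: float) -> str:
    """Map a violation percentage (0 to 100) to the band names quoted in the text.

    Assumption: values between the listed whole-number bounds (e.g. 24.5)
    fall into the lower band.
    """
    if not 0 <= violation_percent <= 100:
        raise ValueError("violation_percent must be between 0 and 100")
    if violation_percent < 25:
        return "Normal"
    if violation_percent < 50:
        return "Satisfactory"
    if violation_percent < 75:
        return "Disruptive"
    return "Problematic"


if __name__ == "__main__":
    for score in (10, 30, 60, 90):
        print(score, rating_label(score))
```

Under this reading, the trollbot accounts the article discusses are those scoring 50% or higher, that is, the Disruptive and Problematic bands.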

As of December 27, 2020, Bot Sentinel had classified more than 314,000 Twitter accounts as Disruptive or Problematic (trollbots). Such accounts often engage in malicious or dangerous tweet activity that harasses or can harm others, spam hashtags and share disinformation. About 25% of the more than 1.5 million Twitter accounts classified by Bot Sentinel are Disruptive or Problematic.

We queried the Bot Sentinel site for the ratings given to the Twitter accounts of some top US politicians. Here are the results:

Twitter positioning

Twitter says it works continuously to detect bots that post large numbers of tweets and try to manipulate trending topics. When it finds abuse, Twitter suspends suspicious accounts and blocks the use of its APIs to automate the sending of messages.

Despite Twitter’s efforts, studies show that many bots still go undetected by Twitter, or are detected only after a long time.

Living with Bots

The fact is that bots with unethical or criminal purposes will not stop, despite the continued efforts of Twitter and other platforms. Bots are used for political purposes worldwide. The race between bot detection and the development of increasingly “invisible” bots does not seem likely to have a winner in the short term. The best solution to this problem will always be the human capacity to ponder and investigate what is being shared, and to do so rationally, not emotionally or out of spurious interests.

Cover image designed by upklyak / Freepik.

Odysci